Automation technologies – machines capable of performing productive tasks in place of human workers – have played an enormous role in the economic history of humanity since the Industrial Revolution. From the automation of textile production in the 19th century to the mechanisation of agriculture in the early 20th century, historical waves of automation drove huge sectoral reallocations of labour and helped spur urbanisation and massive social change. These waves of automation were far from perfectly benevolent in the short and medium run (Acemoglu and Johnson 2023), but ultimately contributed to immense growth in production and living standards in industrialised countries.

Between the 1970s and early 2020s, the story of automation in high-income countries remained fairly consistent (Autor 2015). Advances in machinery, the rise of computers, and the proliferation of digital technologies led to the gradual automation of ‘middle-skilled’ tasks ranging from factory-floor assembly-line tasks to clerical bookkeeping and accounting tasks (Autor et al. 2003). These tasks – consisting of discrete, formalisable sequences of steps – could increasingly be programmed into ever-cheaper computers and machines, displacing humans from many occupations.

These incremental waves of ‘routine-biased’ automation contributed to a widely discussed ‘polarisation’ of the labour market: middle-wage manufacturing and clerical jobs slowly melted away while new jobs appeared in low-wage cleaning, retail, and personal care occupations as well as high-wage managerial, technical, and professional occupations. As a consequence, wage and income inequality increased dramatically over this period, with demographic groups once concentrated in automation-stricken occupations falling behind (Acemoglu and Restrepo 2022) while higher-income professionals and capital owners pulled ahead (Moll et al. 2022).

Beginning in the 2010s, economists observed that the burgeoning field of machine learning could steer automation in a new direction. Previously, tasks could only be automated if they could be broken down into explicit sequences of steps that could be formally explained to a computer or machine. Many tasks that required creativity or tacit, hard-to-formalise knowledge – from writing to medical diagnosis to graphic design – hence avoided automation. But in the 2010s, economists noted that emerging ‘deep learning’ techniques, which trained computers inductively on large existing datasets rather than providing explicit instructions, might eventually permit automation of even creative or tacit-knowledge-reliant tasks.

The first wave of machine-learning-based automation technologies targeted ‘predictive’ tasks such as bail decisions, hiring decisions, or medical diagnoses (Kleinberg et al. 2018, Chalfin et al. 2016, Mullainathan and Obermeyer 2022). Machine-learning algorithms became increasingly good at making binary predictions from high-dimensional input data, prompting concerns about the future of occupations like radiology. But creative tasks still seemed securely insulated from the threat of automation.

This changed with the public release of impressive ‘generative’ artificial intelligence systems in mid-to-late 2022. Trained using deep-learning techniques to generate large coherent bodies of text or well-produced images in response to written prompts, these systems were substantially more capable than any pre-existing chatbot or image generation tool. For the first time, it appeared that creative writing or design tasks might face imminent widespread automation.

In a recent paper (Noy and Zhang 2023), we report results from an online experiment that offers a first look at the potential productivity and labour market impacts of text-based generative AI systems, specifically ChatGPT 3.5.

Experimental design

We conducted the experiment on Prolific, a survey platform that is a mainstay of academic social science research. We screened tens of thousands of respondents on the platform to identify a subset of college-educated respondents in our occupations of interest: managers, human resource (HR) professionals, grant writers, marketers, consultants, and data analysts. We chose these occupations because we could design realistic, occupation-specific writing tasks for them that took 20–30 minutes and could be administered through an online survey. Managers and HR professionals were asked to write a sensitive email, marketers a press release for a hypothetical product, grant writers a grant application, consultants a short report, and data analysts an analysis plan. About 85% of participants rated the tasks as “realistic” or “very realistic” imitations of real tasks performed in their occupations.

Prolific respondents who passed our screening stage were invited to complete an hour-long survey involving two occupation-specific writing tasks. Participants were paid a base rate of $10 and were heavily incentivised to perform well on the tasks: their submissions were graded by other Prolific respondents working in the same occupations, and they received up to $14 in bonus payments based on their grades. The average total payment in our sample was $17/hour, well above the typical $12/hour on Prolific. This combination of above-market pay and high-powered incentives elicited substantial effort from participants, who spent an average of 27 minutes on the first task.

Between the first and second tasks, participants were randomised into a treatment or control group. Treated participants were told to sign up for ChatGPT and enter several sample prompts, showing them how to use the technology. Control participants were instead told to sign up for Overleaf, a LaTeX editor; this kept survey time as similar as possible between the two groups and minimised selective attrition. Almost no control participants used Overleaf on the second task. Treated participants were told they were permitted to use ChatGPT on the second task if they found it helpful.
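The randomisation step can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the authors' procedure: it assumes complete randomisation (shuffle, then split in half) into the ChatGPT and Overleaf arms, and it adds an independent second shuffle for the ‘convex’ versus ‘linear’ incentive schemes, using the 40% convex share the article reports later. The function `assign` and the `Assignment` record are hypothetical names.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Assignment:
    participant_id: str
    arm: str        # "treatment" (ChatGPT) or "control" (Overleaf)
    incentive: str  # "convex" or "linear", drawn independently of arm


def assign(participant_ids, convex_share=0.4, seed=0):
    """Hypothetical sketch: complete randomisation into arms plus an
    independent cross-randomisation into incentive schemes.
    Assumes participant IDs are unique; shares and method are assumptions."""
    rng = random.Random(seed)
    ids = list(participant_ids)

    # Arm: shuffle a copy, then put the first half in treatment.
    arm_order = ids[:]
    rng.shuffle(arm_order)
    treated = set(arm_order[: len(ids) // 2])

    # Incentive scheme: a second, independent shuffle.
    incentive_order = ids[:]
    rng.shuffle(incentive_order)
    convex = set(incentive_order[: round(convex_share * len(ids))])

    return [
        Assignment(
            pid,
            "treatment" if pid in treated else "control",
            "convex" if pid in convex else "linear",
        )
        for pid in ids
    ]


assignments = assign([f"p{i}" for i in range(10)], seed=1)
```

Shuffling rather than flipping a coin for each participant guarantees equal group sizes; drawing the incentive scheme from a separate shuffle makes it independent of treatment status, so the two comparisons can be analysed separately.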

Results

The treatment group overwhelmingly chose to use ChatGPT on the second task: 87% of those who successfully signed up for an account used it. Treated participants were very impressed with the technology, giving it an average usefulness score of 4.4 out of 5.0. Almost all users simply pasted the task prompt into ChatGPT and submitted an unedited or lightly edited version of its output. Contrary to expectations, few participants chose to use ChatGPT in other ways, such as using it to edit their own draft, to brainstorm ideas, or to write a rough draft before heavily editing its output.

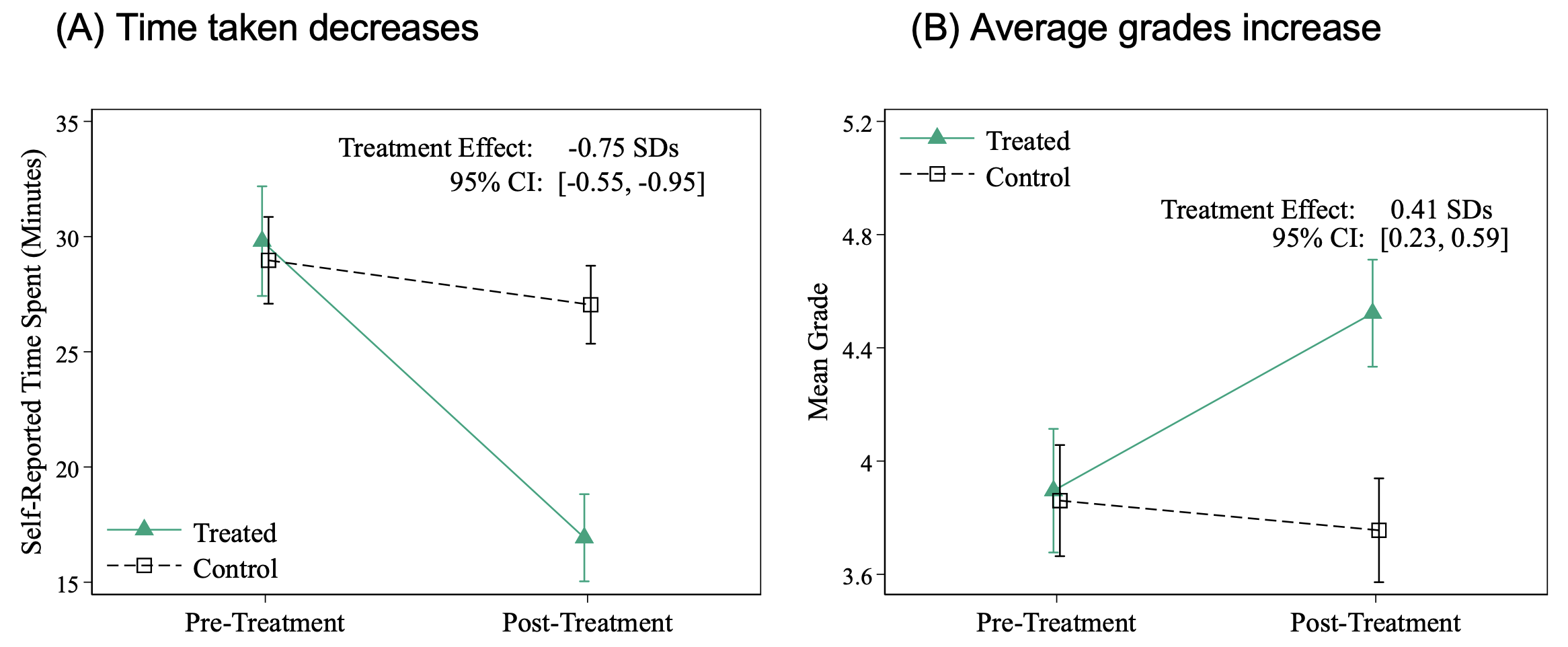

Consequently, time spent on the second task dropped precipitously for the treatment group compared to the control group on the second task, decreasing by 40% (Figure 1 Panel A). Average grades rose by 18% (Figure 1 Panel B). The increase in grades largely reflected graders’ high opinion of pure-ChatGPT output compared to pure-human output, and does not seem to have reflected any value-added from the treated participants themselves.

Figure 1 Productivity effects
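The 40% and 18% figures are treatment-control differences expressed relative to the control group. A minimal sketch of that calculation, assuming simple unadjusted group means; the paper's estimates may come from regressions with additional controls, which this omits. The function name `pct_effect` is hypothetical and the inputs are toy values, not data from the experiment.

```python
from statistics import mean


def pct_effect(treated, control):
    """Treatment-control difference in means, as a percentage of the
    control mean. Unadjusted comparison only: no controls, no standard errors."""
    control_mean = mean(control)
    if control_mean == 0:
        raise ValueError("control mean is zero; percentage effect undefined")
    return 100 * (mean(treated) - control_mean) / control_mean


# Toy values (not experimental data): minutes spent on the second task.
print(pct_effect(treated=[15, 15], control=[25, 25]))  # -40.0
```

A negative value is a reduction (time), a positive value an increase (grades).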

Why did the participants do so little editing of ChatGPT’s output? One possibility is that they recognised clear deficiencies in the output or areas for improvement, but wanted to rush through the task as quickly as possible. Under this interpretation, participants were simply using ChatGPT as a time-saving device and ignoring its output quality, which would limit how well our results generalise to higher-stakes real-world settings.

Three pieces of evidence contradict this interpretation. First, 40% of our participants were cross-randomised into a ‘convex’ incentive scheme that promised them a substantial additional bonus payment for receiving a high grade of 6 or 7 out of 7. This provided an extra incentive to fix or improve ChatGPT’s raw output, yet respondents in this group spent no more time editing on average than respondents in our main ‘linear’ incentive group, and did not receive higher grades. Second, respondents who did choose to edit (or spent longer editing) did not receive higher grades than those who submitted unedited output. Third, many respondents clearly judged that ChatGPT was an output-improving device in addition to a time-saving device. At the end of the survey, some treated respondents were given an opportunity to revise or replace their pre-treatment task submission using ChatGPT; 19% fully replaced their entry with ChatGPT’s output and a further 17% used ChatGPT as an editor. Our overall interpretation is that participants saw ChatGPT’s output as high-quality and lacking obvious areas of improvement.

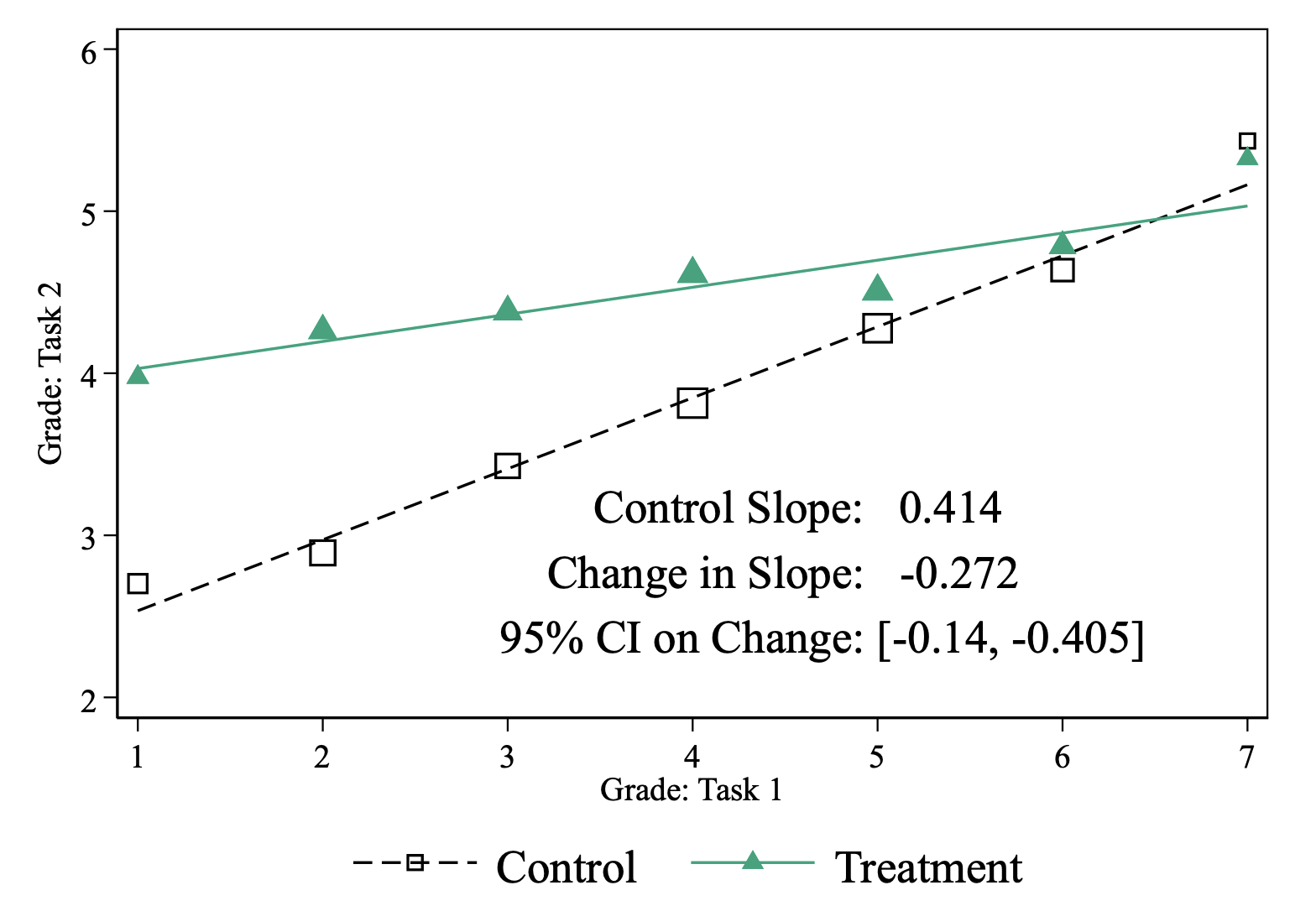

As a consequence of broadly uniform usage of ChatGPT in the treatment group, inequality in productivity between participants shrank dramatically, as shown in Figure 2. ChatGPT access allowed nearly everyone in the treated group to perform as well as the top humans in the control group.

Figure 2 Grade inequality decreases
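One simple way to quantify the narrowing in grade inequality is to compare the spread of grades within each group. The sketch below uses the population standard deviation and the gap between the 90th and 10th percentiles; both are illustrative choices, not necessarily the measures used in the paper. The function `spread` is hypothetical and the grades are toy values on the 1–7 scale.

```python
from statistics import pstdev, quantiles


def spread(grades):
    """Return (population standard deviation, 90th minus 10th percentile).
    Requires at least two grades."""
    # 'inclusive' keeps the percentiles within the observed range of grades.
    deciles = quantiles(grades, n=10, method="inclusive")  # 10th..90th percentiles
    return pstdev(grades), deciles[-1] - deciles[0]


# Toy grades (not experimental data).
control = [2, 3, 4, 5, 6, 7]
treated = [5, 5, 6, 6, 6, 5]
print(spread(treated))     # (0.5, 1.0)
print(spread(control)[1])  # 4.0
```

If nearly everyone submits similar high-quality output, both measures shrink, which is the pattern the text describes.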

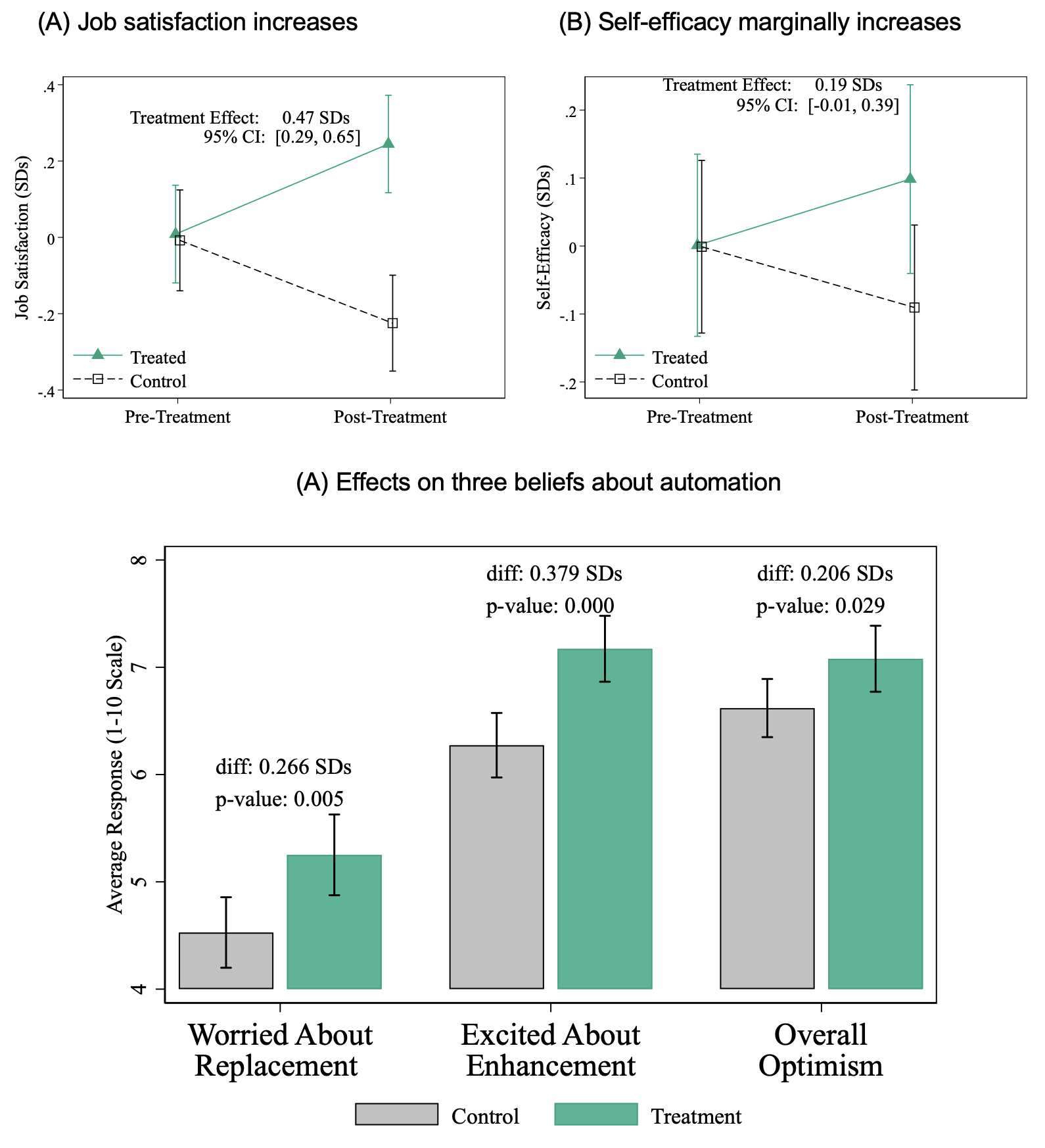

How did participants react to being introduced to this startlingly productive technology? We asked participants about their enjoyment of each task; as Figure 3 Panel A shows, enjoyment rose by 0.5 standard deviations in the treatment group compared to the control group. Participants’ concerns about AI displacing workers in their occupation rose in the treatment group, as did excitement about AI augmenting workers in their occupation, while overall optimism about AI rose slightly. Respondents therefore greeted the technology enthusiastically overall, but not without trepidation. These gaps disappeared in subsequent resurveying.

Figure 3 Job satisfaction, self-efficacy, and beliefs about automation

We resurveyed participants two weeks and again two months after the experiment to track the diffusion of ChatGPT into their real jobs. At two weeks, 34% of treated and 18% of control respondents had used ChatGPT in their job in the past week; at two months, these figures were 42% and 27%. The slow rise in usage and the persistent treatment-control gap suggest that ChatGPT’s diffusion into real-world jobs remains somewhat slow and hampered by information frictions. Respondents not using ChatGPT in their main job gave a mix of reasons: lack of familiarity with it, lack of access at work, or a judgement that it was not useful because their work depends heavily on context-specific knowledge and style.
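The persistence of the treatment-control gap can be read directly off the reported usage shares. A short calculation using only the percentages above; the names `USAGE`, `usage_gaps`, and `usage_growth` are illustrative.

```python
# Share (%) of respondents who had used ChatGPT in their job in the past week,
# as reported for the two resurvey waves.
USAGE = {
    "two weeks": {"treated": 34, "control": 18},
    "two months": {"treated": 42, "control": 27},
}


def usage_gaps(usage):
    """Treatment-control gap in percentage points for each wave."""
    return {wave: s["treated"] - s["control"] for wave, s in usage.items()}


def usage_growth(usage, first="two weeks", last="two months"):
    """Change in each group's usage share between two waves, in points."""
    return {
        group: usage[last][group] - usage[first][group]
        for group in ("treated", "control")
    }


print(usage_gaps(USAGE))    # {'two weeks': 16, 'two months': 15}
print(usage_growth(USAGE))  # {'treated': 8, 'control': 9}
```

Both groups' usage rose by a similar amount (8 and 9 points), so the gap stayed at roughly 15–16 points rather than closing as the control group caught up.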

Conclusion

In our experiment, ChatGPT substantially increased productivity in mid-level professional writing tasks, raising both speed and quality and narrowing the gap between higher- and lower-ability writers. Its aggregate impacts, however, will depend on complex general-equilibrium considerations that our experiment cannot speak to. As we discuss in the paper, a number of factors – including the elasticity of demand for ChatGPT-relevant services, the particular skills ChatGPT best complements, and the nature of optimal production structures with ChatGPT – will determine the impacts of ChatGPT-like technologies on employment, occupation, and wage structures.

References

Acemoglu, D and P Restrepo (2022), “Tasks, Automation, and the Rise in US Wage Inequality”, Econometrica 90(5).

Acemoglu, D and S Johnson (2023), Power and Progress: Our 1000-Year Struggle Over Technology and Prosperity, New York: PublicAffairs.

Autor, D, F Levy and R Murnane (2003), “The Skill Content of Recent Technological Change: An Empirical Exploration”, Quarterly Journal of Economics 118(4).

Autor, D (2015), “Why Are There Still So Many Jobs? The History and Future of Workplace Automation”, Journal of Economic Perspectives 29(3).

Chalfin, A, O Danieli, A Hillis, Z Jelveh, M Luca, J Ludwig and S Mullainathan (2016), “Productivity and Selection of Human Capital with Machine Learning”, American Economic Review 106(5).

Kleinberg, J, H Lakkaraju, J Leskovec, J Ludwig and S Mullainathan (2018), “Human Decisions and Machine Predictions”, Quarterly Journal of Economics 133(1).

Moll, B, L Rachel and P Restrepo (2022), “Uneven Growth: Automation’s Impact on Income and Wealth Inequality”, Econometrica 90(6).

Mullainathan, S and Z Obermeyer (2022), “Diagnosing Physician Error: A Machine Learning Approach to Low-Value Healthcare”, Quarterly Journal of Economics 137(2).

Noy, S and W Zhang (2023), “Experimental Evidence on the Productivity Effects of Generative Artificial Intelligence”, working paper.